
Call Chain Tracing - SkyWalking

1.1. SkyWalking Agent Integration Guide

Plugin categories:

| Agent plugins required for RPC call tracing | Required |
|---|---|
| apm-asynchttpclient-2.x-plugin-8.6.0.jar | Yes |
| apm-feign-default-http-9.x-plugin-8.6.0.jar | Yes |
| apm-httpasyncclient-4.x-plugin-8.6.0.jar | Yes |
| apm-httpClient-4.x-plugin-8.6.0.jar | Yes |
| apm-httpclient-commons-8.6.0.jar | Yes |
| apm-hystrix-1.x-plugin-8.6.0.jar | Yes |
| apm-okhttp-4.x-plugin-8.6.0.jar | Yes |
| apm-okhttp-common-8.6.0.jar | Yes |

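| Agent plugins required for the web container | Required (pick one of the two containers) |
|---|---|
| apm-undertow-2.x-plugin-8.6.0.jar | Required if the project uses Undertow |
| tomcat-7.x-8.x-plugin-8.6.0.jar | Required if the project uses Tomcat |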

| Agent plugins required for gateway tracing | Required |
|---|---|
| apm-spring-cloud-gateway-2.1.x-plugin-8.6.0.jar | Required for the comet-gateway project |
| apm-spring-webflux-5.x-plugin-8.6.0.jar | Required for the comet-gateway project |
| apm-netty-socketio-plugin-8.6.0.jar | Required for the comet-gateway project |

| Agent plugins for Spring asynchronous call tracing | Required |
|---|---|
| apm-spring-async-annotation-plugin-8.6.0.jar | No |

| Agent plugins for transaction tracing | Required |
|---|---|
| apm-spring-tx-plugin-8.6.0.jar | No |

| Agent plugins for database tracing | Required |
|---|---|
| apm-jdbc-commons-8.6.0.jar | No |
| apm-oracle-10.x-plugin-2.2.0-SNAPSHOT.jar | No |
| apm-mysql-8.x-plugin-8.6.0.jar | No |
| apm-mysql-commons-8.6.0.jar | No |

| Agent plugins for call-stack tracing | Required |
|---|---|
| apm-spring-annotation-plugin-8.6.0.jar | No |

| Agent plugins for RocketMQ tracing | Required |
|---|---|
| apm-rocketmq-4.x-plugin-8.6.0.jar | No |

| Agent plugins for scheduled-task tracing | Required |
|---|---|
| apm-spring-scheduled-annotation-plugin-8.6.0.jar | No |
| apm-quartz-scheduler-2.x-plugin-8.6.0.jar | No |

| Agent plugins for trace ignoring | Required |
|---|---|
| apm-trace-ignore-plugin-8.6.0.jar | No |

Plugin usage:

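**By default, agent/plugins contains only the plugins for RPC and gateway tracing; all other plugins are in agent/optional-plugins. To trace additional components, move the corresponding plugin JARs into agent/plugins.**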
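As a minimal sketch, enabling an optional plugin just means moving its JAR from agent/optional-plugins into agent/plugins. The Bash function below assumes that directory layout. Its name, `enable_sw_plugins`, is hypothetical and not part of SkyWalking. The agent loads its plugins when the JVM starts, so restart the application after moving them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: move the named optional plugins into agent/plugins.
# Usage: enable_sw_plugins <agent_home> <plugin-jar>...
enable_sw_plugins() {
  local agent_home="$1"; shift
  local src="$agent_home/optional-plugins"
  local dst="$agent_home/plugins"
  local jar
  for jar in "$@"; do
    if [ -f "$dst/$jar" ]; then
      # Already active; nothing to move
      echo "already enabled: $jar"
    elif [ -f "$src/$jar" ]; then
      mv "$src/$jar" "$dst/" && echo "enabled: $jar"
    else
      echo "not found in $src: $jar" >&2
      return 1
    fi
  done
}
```

For example, with the agent directory used in the startup script of this guide, trace ignoring could be enabled with `enable_sw_plugins /app/dcits/app-run/agent apm-trace-ignore-plugin-8.6.0.jar`.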

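Trace ignoring requires apm-trace-ignore-plugin-8.6.0.jar to be in agent/plugins. It can be configured in either of two ways:

Configuration file:

Create the file apm-trace-ignore-plugin.config under agent/config with the following content: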

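```properties
# Paths whose trace data is not uploaded
# Path matching rules:
#   /path/?   matches a single character
#   /path/*   matches multiple characters
#   /path/**  matches multiple characters and multiple path levels
# Separate multiple rules with commas (",")
trace.ignore_path=${SW_AGENT_TRACE_IGNORE_PATH:/lhdmon/**,Lettuce/**,/actuator/**,Oracle/**,/eureka/**}
```

The `${SW_AGENT_TRACE_IGNORE_PATH:...}` form means the value can be overridden by a system property or environment variable named SW_AGENT_TRACE_IGNORE_PATH. The text after the colon is the default.

Startup parameters: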

```shell
-javaagent:../skywalking-agent.jar=agent.service_name=gateway-dev3,trace.ignore_path='/lhdmon/**,Lettuce/**,/actuator/**,Oracle/**,/eureka/**'
```

or

```shell
-Dskywalking.trace.ignore_path=/lhdmon/**,Lettuce/**,/actuator/**,Oracle/**,/eureka/**
```

Integrating skywalking-agent into the application

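Deploy the application and skywalking-agent on the same server. Add the following to the startup script, adjust the parameters for your environment, and then start the application: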

```shell
######################skywalking##########################
# Path to skywalking-agent.jar; adjust for your environment
SKYWALKING_AGENT="/app/dcits/app-run/agent/skywalking-agent.jar"
# Service name registered with SkyWalking; adjust for your environment
SKYWALKING_AGENT_SERVICE_NAME="ensemble-cif-service"
# Host and port of the SkyWalking service that receives the data collected by the agent; adjust for your environment
SKYWALKING_COLLECTOR_BACKEND_SERVICE=192.168.161.116:11800
SKYWALKING_AGENT_OPTS=""
# Attach the agent only if the JAR exists, so the application still starts without it
if [ -f "$SKYWALKING_AGENT" ]; then
  SKYWALKING_AGENT_OPTS="$SKYWALKING_AGENT_OPTS -javaagent:$SKYWALKING_AGENT"
fi
if [ -n "$SKYWALKING_AGENT_SERVICE_NAME" ]; then
  SKYWALKING_AGENT_OPTS="$SKYWALKING_AGENT_OPTS -Dskywalking.agent.service_name=$SKYWALKING_AGENT_SERVICE_NAME"
fi
if [ -n "$SKYWALKING_COLLECTOR_BACKEND_SERVICE" ]; then
  SKYWALKING_AGENT_OPTS="$SKYWALKING_AGENT_OPTS -Dskywalking.collector.backend_service=$SKYWALKING_COLLECTOR_BACKEND_SERVICE"
fi
echo "Using SKYWALKING_AGENT_OPTS: $SKYWALKING_AGENT_OPTS"
######################skywalking##########################
```

Add the SKYWALKING_AGENT_OPTS variable to the java -jar command as follows:

```shell
nohup java ${JAVA_MEM_OPTS} ${JAVA_DEBUG_OPTS} ${SKYWALKING_AGENT_OPTS} ${JAVA_JMX_OPTS} ${GC_OPT} ${HEAP_DUMP_OPTS} -Dspring.profiles.active=$ENV_CONFIG -jar ${BASE_PATH}/${APPLICATION_JAR} > ${LOG_PATH} 2>&1 &
```

1.2. Integrating SkyWalking Logs with the Existing ELK Log Query

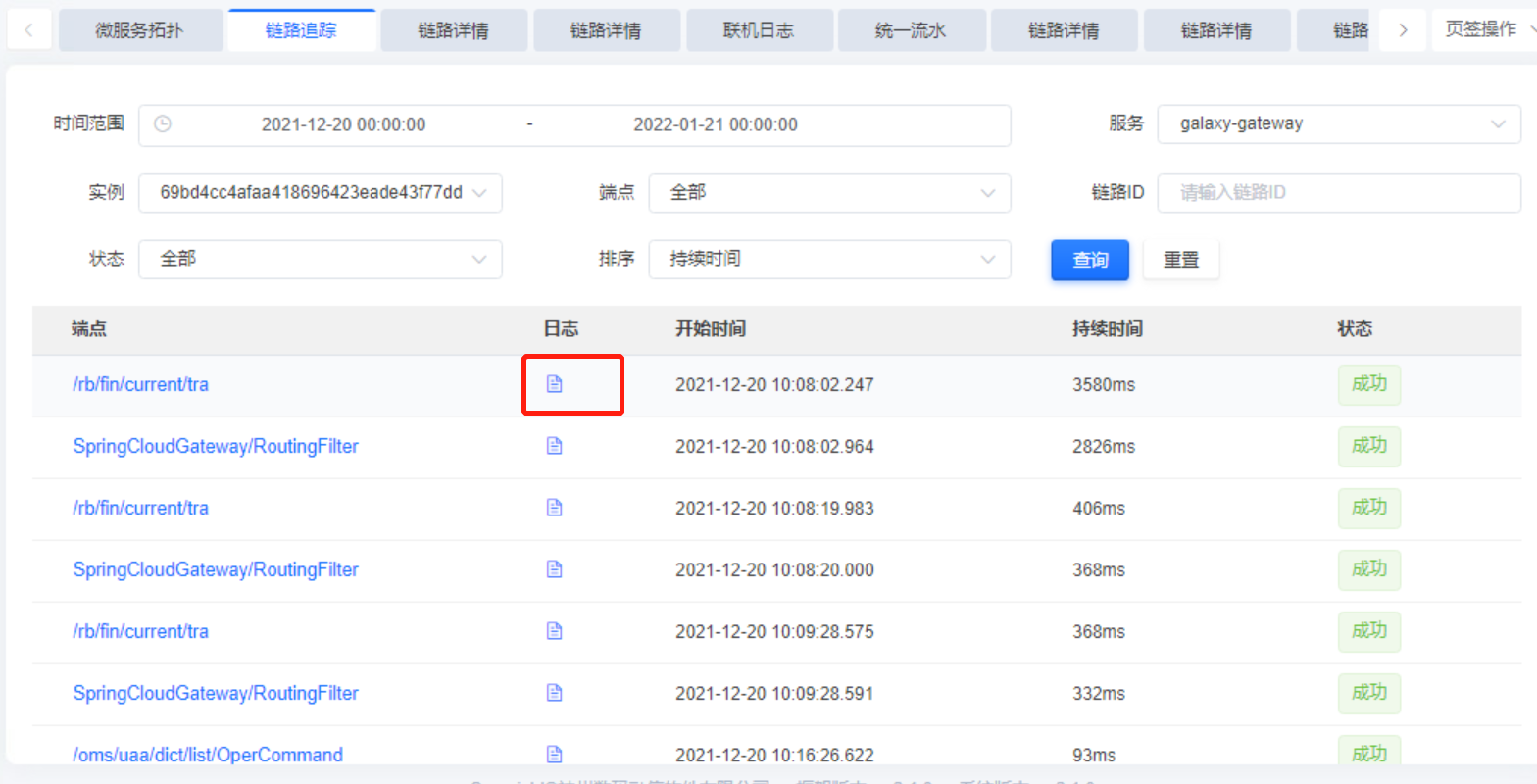

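After the O&M platform adopted SkyWalking call-chain tracing, the SkyWalking trace logs were integrated with the platform's existing log query so that the logs of a trace can be looked up. In Trace Tracking, click the Log button to query the logs of a trace by its traceId.

Integration steps

This feature requires the business application to integrate SkyWalking's log-related JAR. The steps are as follows: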

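(1) Add the following dependency to the pom file:

```xml
<dependency>
    <groupId>com.dcits.jupiter</groupId>
    <artifactId>jupiter-log</artifactId>
</dependency>
```

(2) Modify the logback.xml configuration. There are two approaches; the second is recommended for core business services.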

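Approach 1: In the application's logback.xml, include jupiter-logback.xml from the jupiter-log package and use the JUPITER_LOG_PATTERN it defines in the log output.

Example jupiter-logback.xml: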

```xml
<?xml version="1.0" encoding="UTF-8"?>
<included>
    <!-- Resolve the IP with a custom converter class -->
    <conversionRule conversionWord="ip" converterClass="com.dcits.jupiter.log.common.LogIpConfig"/>
    <springProperty scope="context" name="port" source="server.port" defaultValue="8080"/>
    <springProperty scope="context" name="serviceName" source="spring.application.name"/>
    <springProperty scope="context" name="tenantId" source="galaxy.tenantId"/>
    <springProperty scope="context" name="dataCenter" source="galaxy.dataCenter"/>
    <springProperty scope="context" name="profile" source="galaxy.profile"/>
    <springProperty scope="context" name="logicUnitId" source="galaxy.logicUnitId"/>
    <springProperty scope="context" name="phyUnitId" source="galaxy.phyUnitId"/>
    <springProperty scope="context" name="logback.dir" source="logging.file.path" defaultValue="./logs"/>
    <springProperty scope="context" name="logback.maxHistory" source="logging.file.max-history" defaultValue="14"/>
    <springProperty scope="context" name="logback.totalSizeCap" source="logging.file.total-size-cap" defaultValue="30GB"/>
    <springProperty scope="context" name="logback.maxFileSize" source="logging.file.max-size" defaultValue="10MB"/>
    <property name="JUPITER_LOG_PATTERN"
              value="#[%d{yyyy-MM-dd HH:mm:ss,SSS}] [%ip:${port}:${tenantId}:${profile}:${serviceName}:${logicUnitId}:${phyUnitId}:%X{J-TraceId:-}:%X{J-SpanId:-}:%X{J-SpanId-Pre:-}] [%thread] %-5level %logger{86}.%M:%L - %msg%n"/>
</included>
```

Approach 2: Modify the existing logback-spring.xml

Example:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration scan="true" scanPeriod="10 seconds" debug="false">
    <contextName>logback</contextName>
    <!-- IP printed in the log output -->
    <conversionRule conversionWord="ip" converterClass="com.dcits.jupiter.log.common.LogIpConfig"/>
    <!-- spring.application.name of the microservice -->
    <springProperty scope="context" name="spring.application.name" source="spring.application.name"
                    defaultValue="ensemble-cif-service"/>
    <!-- Port of the microservice -->
    <springProperty scope="context" name="port" source="server.port" defaultValue="8080"/>
    <!-- Log output pattern; it must print the SkyWalking traceId, which "%tid" provides -->
    <property name="logging.pattern"
              value="#%d{yyyy-MM-dd HH:mm:ss.SSS} [${spring.application.name}] [%ip:${port},%tid,%X{channelSeqNo},%X{jobRunId},%X{stepRunId}] [%X{serviceCode}-%X{messageType}-%X{messageCode}] [%thread] %-5level %logger{50} %line - %msg%n"/>
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <!-- %tid is resolved by SkyWalking's TraceIdPatternLogbackLayout, as in the file appender of the example below -->
        <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
            <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.TraceIdPatternLogbackLayout">
                <pattern>${logging.pattern}</pattern>
            </layout>
        </encoder>
    </appender>
</configuration>
```

Example of the modified logback-spring.xml for the core ensemble-cif-service service:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration scan="true" scanPeriod="10 seconds" debug="false">
    <contextName>logback</contextName>
    <conversionRule conversionWord="ip" converterClass="com.dcits.jupiter.log.common.LogIpConfig"/>
    <springProperty scope="context" name="spring.application.name" source="spring.application.name"
                    defaultValue="ensemble-cif-service"/>
    <springProperty scope="context" name="port" source="server.port" defaultValue="8080"/>
    <!-- Storage location of the log files; do not use relative paths in the LogBack configuration -->
    <property name="logging.path" value="../../logs"/>
    <property name="logging.maxHistory" value="30"/>
    <property name="logging.maxFileSize" value="10MB"/>
    <property name="logging.totalSizeCap" value="100GB"/>
    <property name="logging.pattern"
              value="#%d{yyyy-MM-dd HH:mm:ss.SSS} [${spring.application.name}] [%ip:${port},%tid,%X{channelSeqNo},%X{jobRunId},%X{stepRunId}] [%X{serviceCode}-%X{messageType}-%X{messageCode}] [%thread] %-5level %logger{50} %line - %msg%n"/>
    <!-- Log masking -->
    <conversionRule conversionWord="msg" converterClass="com.dcits.comet.log.SensitiveDataConverter"/>
    <!-- Output to the console -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>#%d{yyyy-MM-dd HH:mm:ss.SSS} [${spring.application.name}] [%ip:${port},%X{channelSeqNo},%X{jobRunId},%X{stepRunId}] [%X{serviceCode}-%X{messageType}-%X{messageCode}] [%thread] %-5level %logger{50} %line - %msg%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>
    <!-- Output to a file -->
    <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${logging.path}/${spring.application.name}/%d{yyyyMMdd}/%d{yyyyMMdd}.%i.ensemble-cif-service.log</fileNamePattern>
            <maxHistory>${logging.maxHistory}</maxHistory>
            <maxFileSize>${logging.maxFileSize}</maxFileSize>
            <totalSizeCap>${logging.totalSizeCap}</totalSizeCap>
        </rollingPolicy>
        <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
            <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.TraceIdPatternLogbackLayout">
                <pattern>${logging.pattern}</pattern>
            </layout>
        </encoder>
    </appender>
    <root level="info">
        <appender-ref ref="console"/>
        <appender-ref ref="file"/>
    </root>
    <logger name="ShardingSphere-SQL" level="error"/>
    <logger name="springfox.documentation.spring" level="warn"/>
    <logger name="org.springframework" level="warn"/>
    <logger name="org.mybatis.spring" level="info"/>
    <logger name="org.apache.catalina" level="warn"/>
    <logger name="com.netflix" level="warn"/>
    <logger name="com.dcits.comet" level="debug"/>
    <logger name="com.dcits.galaxy.base.data.BeanResult" level="debug"/>
    <logger name="com.dcits.ensemble.cif.service.CifServiceApplication" level="debug"/>
    <logger name="com.dcits.ensemble" level="debug"/>
    <logger name="com.dcits.gravity" level="warn"/>
    <logger name="com.dcits.comet.flow.ExecutorFlow" level="info"/>
    <logger name="com.dcits.comet.flow.antirepeate" level="error"/>
    <logger name="com.dcits.comet.commons.exception.ExceptionContainer" level="error"/>
    <logger name="com.dcits.comet.mq" level="error"/>
    <logger name="com.dcits.comet.notice.core.scheduled" level="error"/>
    <logger name="com.dcits.comet.territory" level="error"/>
    <logger name="com.dcits.comet.dao.interceptor" level="error"/>
    <logger name="com.dcits.jupiter.webmvc.support.filter.TraceFilter" level="error"/>
    <logger name="org.springframework.jdbc.datasource.DataSourceTransactionManager" level="debug"/>
</configuration>
```

**Note: Because the log output must print the SkyWalking traceId, the service must be started with the SkyWalking startup parameters described in section 1.1. When starting the service locally, the console output does not need to include the traceId, so these parameters can be omitted.**
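A real traceId only appears when the agent is attached, so a startup script could warn before launching if the agent options are missing. The check below is a hypothetical sketch that reuses the SKYWALKING_AGENT_OPTS variable from the script in section 1.1. The function name `check_sw_opts` is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical pre-start check: succeed only if the agent options contain -javaagent.
# Without the agent, %tid cannot print a SkyWalking traceId.
check_sw_opts() {
  # Pad with spaces so the option also matches at the start of the string
  case " $1 " in
    *" -javaagent:"*)
      return 0
      ;;
    *)
      echo "WARN: -javaagent is not set; logs will not contain a SkyWalking traceId" >&2
      return 1
      ;;
  esac
}
```

Call it as `check_sw_opts "$SKYWALKING_AGENT_OPTS"` right after the options are assembled. Whether a failed check should abort the start is a per-environment choice. For local starts, the note above allows running without the agent.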

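(3) Modify the Logstash grok configuration

The grok filter in the Logstash configuration:

```conf
grok {
    match => { "message" => "\#%{TIMESTAMP_ISO8601:logdate}\s*\[%{DATA:springApplicationName}\]\s*\[%{IP:ip}\:%{NUMBER:port}\,TID:%{DATA:tId}\,(?<channelSeqNo>[A-Za-z0-9]*)\,(?<jobRunId>[A-Za-z0-9]*)\,(?<stepRunId>[A-Za-z0-9]*)\]\s*\[(?<serviceCode>[A-z]*)\-(?<messageType>[0-9]*)\-(?<messageCode>[0-9]*)\]\s*\[(?<threadId>[-A-z0-9 ]+)\]\s*%{LOGLEVEL:logLevel}\s*" }
}
```

After making these changes, start the project. Once it has started successfully, start elasticsearch-7.6.2, Logstash, and Filebeat in that order, following the O&M platform deployment manual. Once the logs have been collected into Elasticsearch, open the trace list in the Trace Tracking menu of the O&M platform and click the Log button to query the logs of a trace.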
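Before restarting Logstash, you may want to confirm that lines written with the second logback approach fit the grok pattern above. The Bash function below is only a rough approximation of that pattern using Bash's built-in ERE matching. It is not Logstash's grok engine:

- `%{TIMESTAMP_ISO8601}`, `%{IP}` and `%{LOGLEVEL}` are replaced by simpler expressions.
- `[A-z]` is narrowed to `[A-Za-z]`.
- The names `GROK_LIKE_RE` and `extract_tid` are hypothetical.

For a matching line, it prints the tId field.

```shell
#!/usr/bin/env bash
# Rough ERE approximation of the grok pattern; capture group 5 corresponds to tId.
GROK_LIKE_RE='^#([0-9]{4}-[0-9]{2}-[0-9]{2} [0-9:.,]+)[[:space:]]*\[([^]]*)][[:space:]]*\[([0-9.]+):([0-9]+),TID:([^,]*),([A-Za-z0-9]*),([A-Za-z0-9]*),([A-Za-z0-9]*)][[:space:]]*\[([A-Za-z]*)-([0-9]*)-([0-9]*)][[:space:]]*\[([-A-Za-z0-9 ]+)][[:space:]]*(TRACE|DEBUG|INFO|WARN|ERROR)'

# Prints the tId of a matching log line; returns 1 if the line does not match.
extract_tid() {
  local line="$1"
  # The regex variable must stay unquoted so Bash treats it as a pattern
  if [[ $line =~ $GROK_LIKE_RE ]]; then
    printf '%s\n' "${BASH_REMATCH[5]}"
  else
    return 1
  fi
}
```

Run it over a few lines of the application log, for example `while IFS= read -r l; do extract_tid "$l"; done < app.log`, where app.log is a placeholder name. It should print one trace ID per matching line. Lines that print nothing do not match the approximation and are likely to be rejected by the grok filter as well.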

1.3. SkyWalking Prometheus Monitoring Views

The microservice applications to be monitored must integrate the jupiter-metricses package.

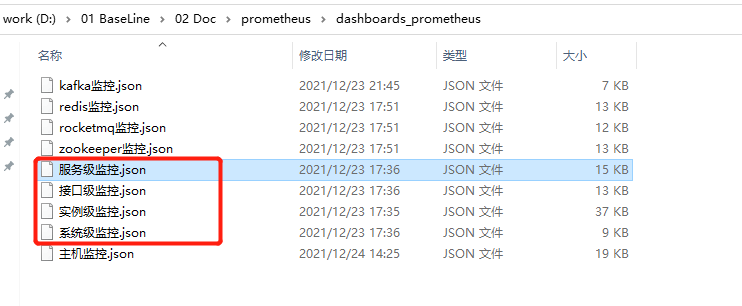

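```xml
<dependency>
    <groupId>com.dcits.jupiter</groupId>
    <artifactId>jupiter-metricses</artifactId>
</dependency>
```

The OMS platform provides system-level, service-level, instance-level, and API-level monitoring views. To use them, import the corresponding dashboard files on the monitoring dashboard page. The dashboard files are shown below: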

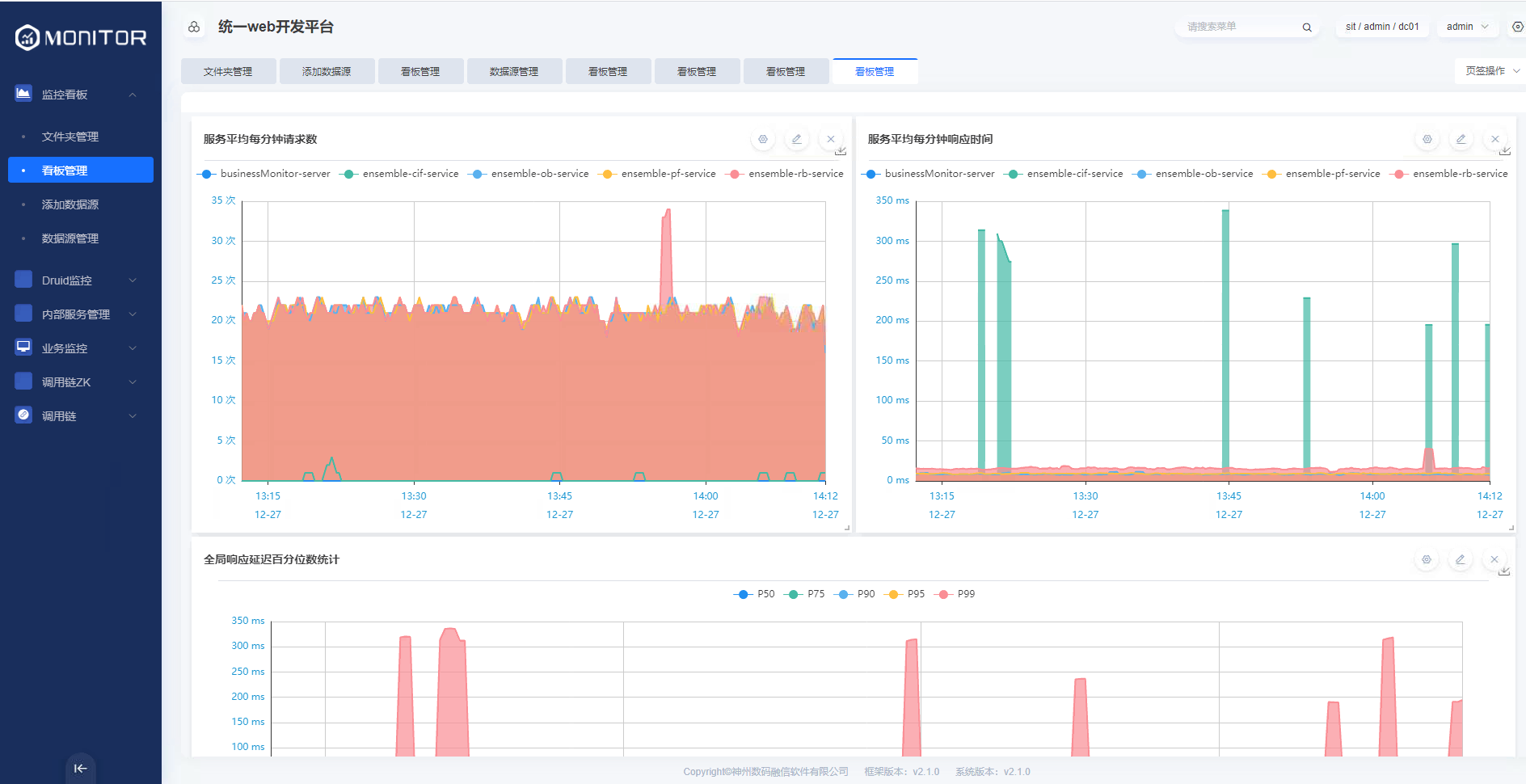

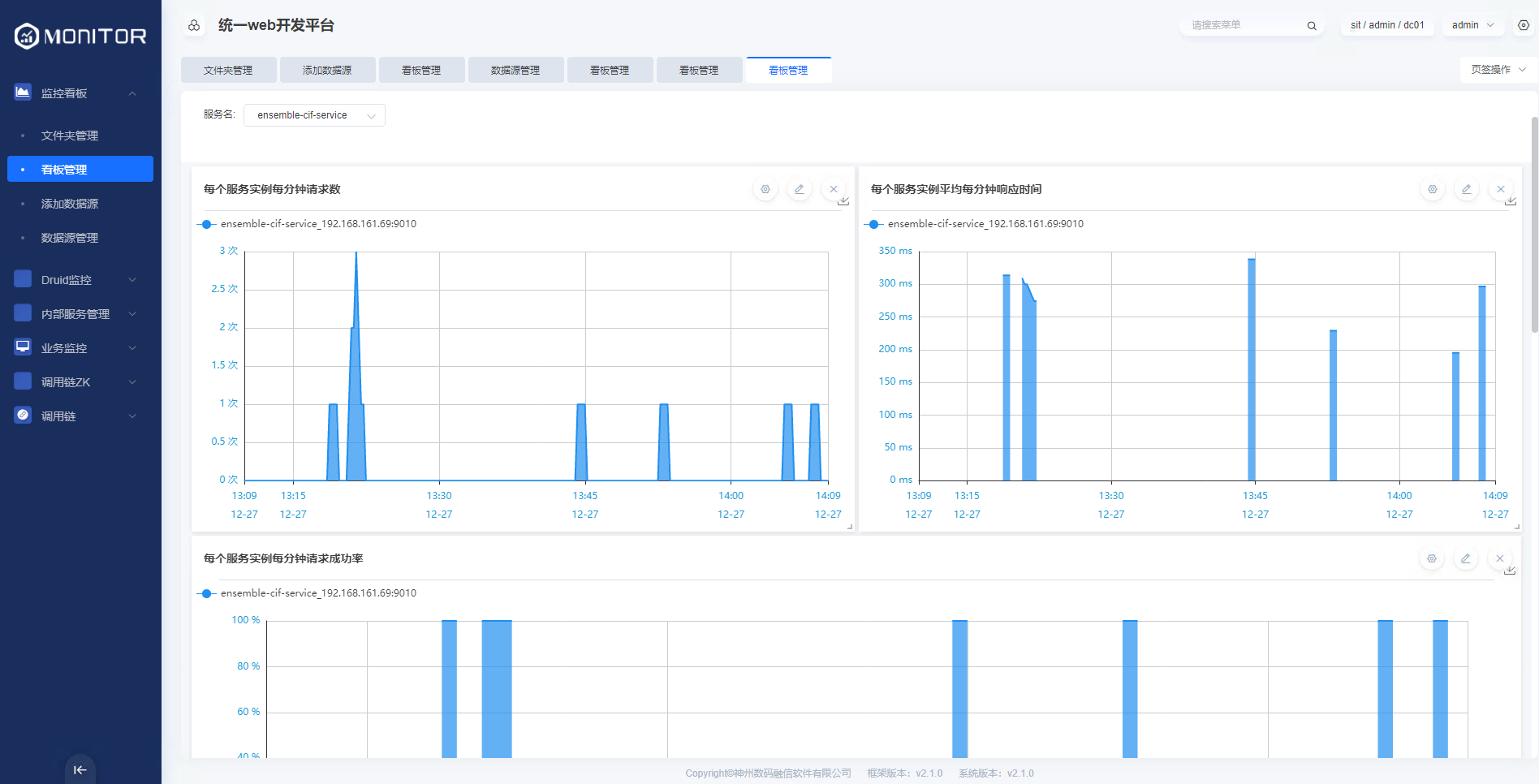

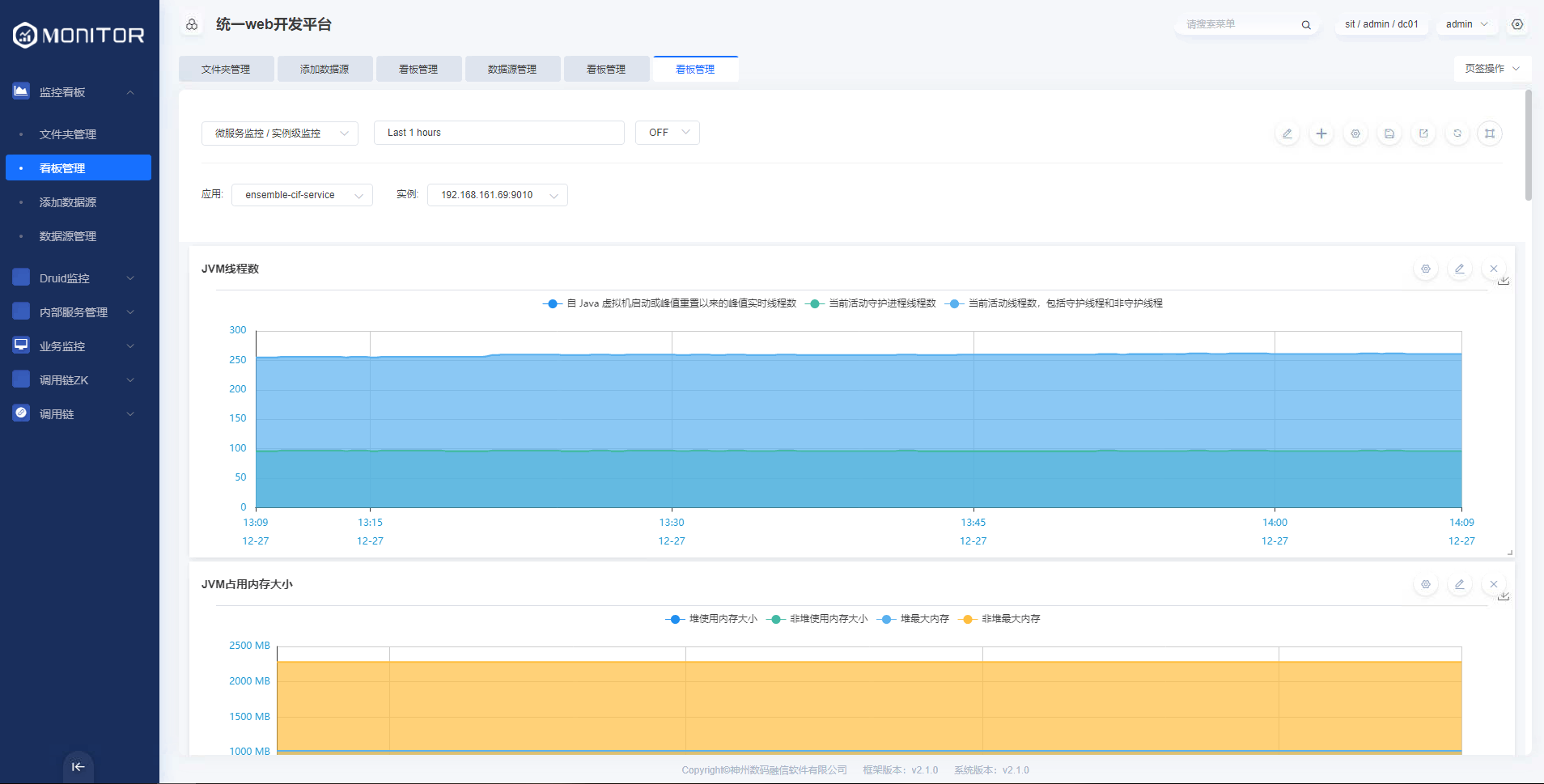

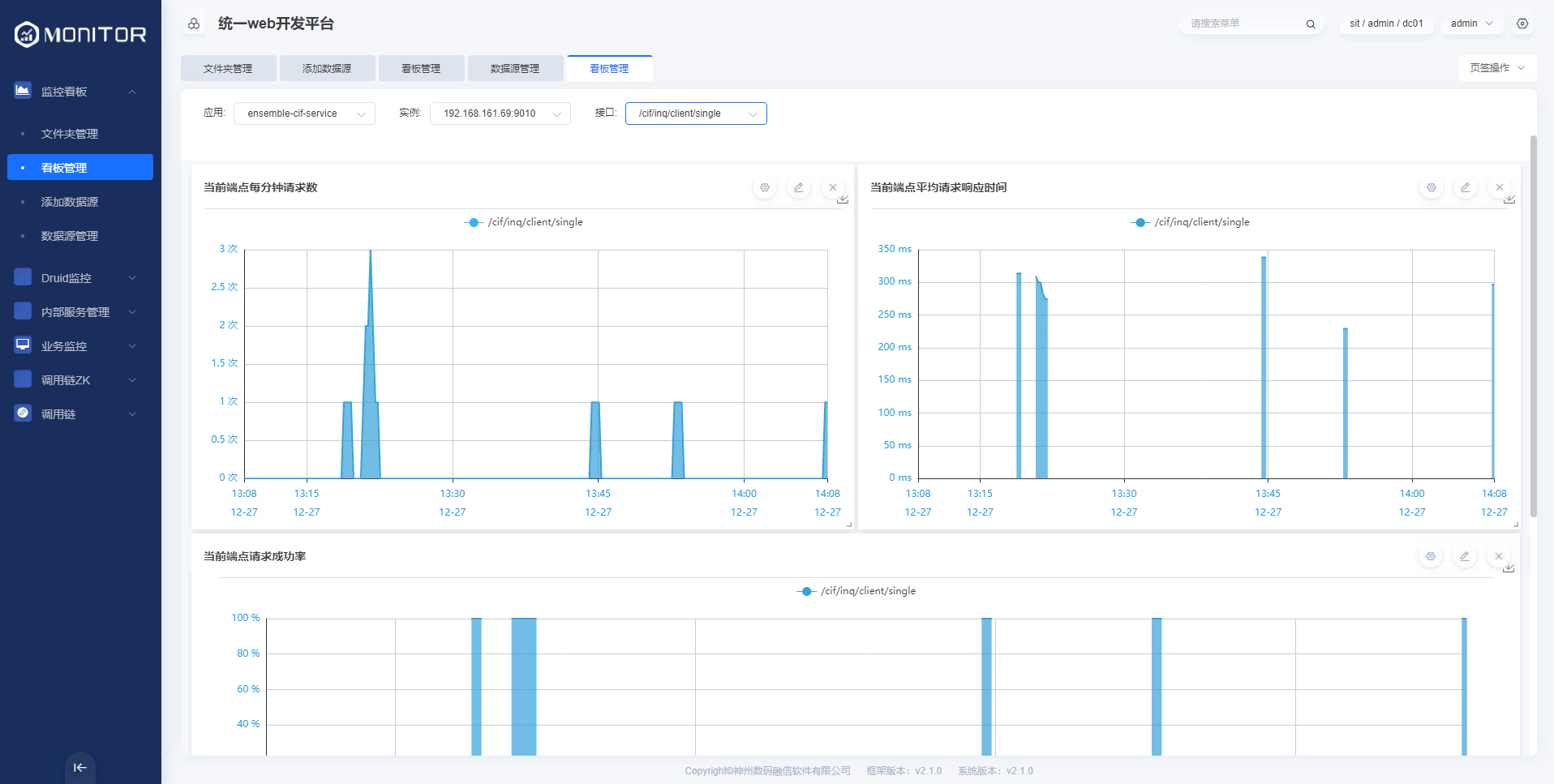

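After importing the dashboard files, you can view the corresponding monitoring views:

System-level monitoring:

Service-level monitoring:

Instance-level monitoring:

API-level monitoring: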

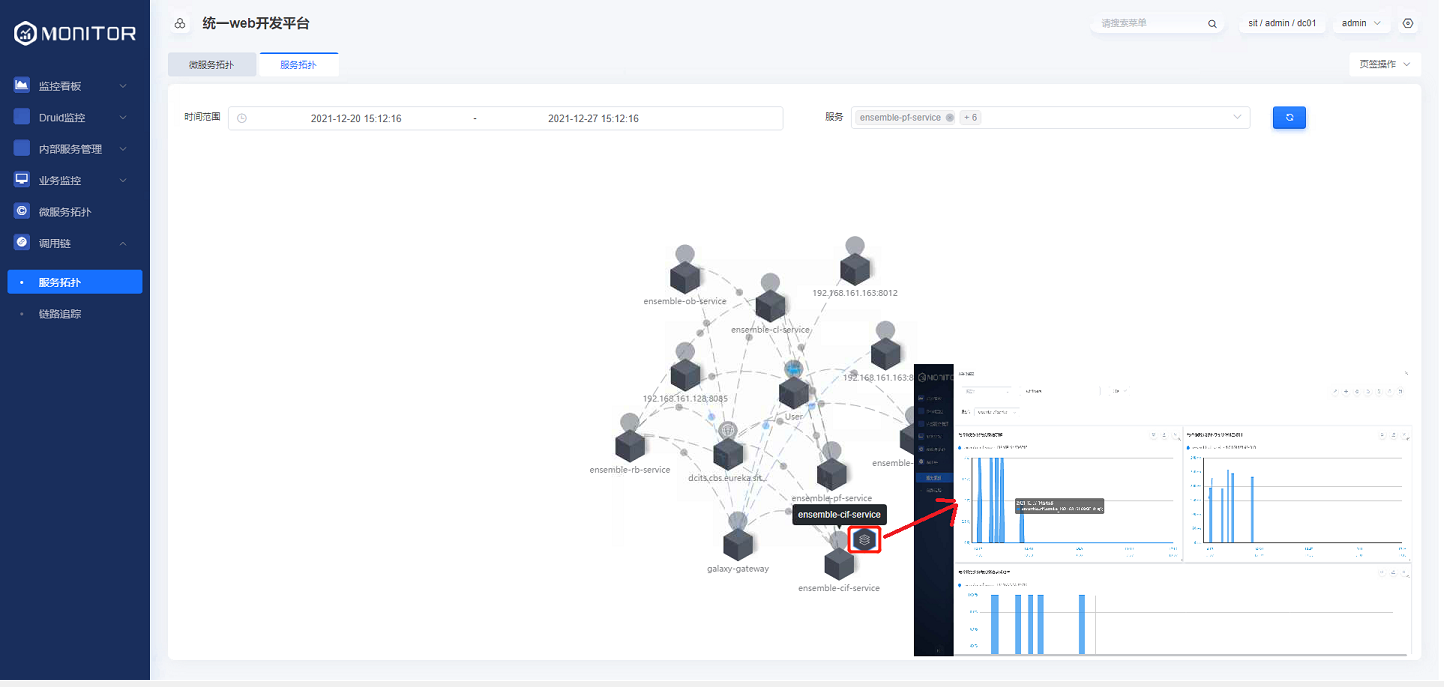

Jumping from the SkyWalking topology map to service-level monitoring