
Logstash Deployment

1.1 Logstash single-node deployment

1.1.1 Upload the installation package to the installation directory

1.1.2 Extract the installation package

```shell
unzip logstash-7.6.2.zip
```

1.1.3 Modify the configuration file galaxy-online.conf

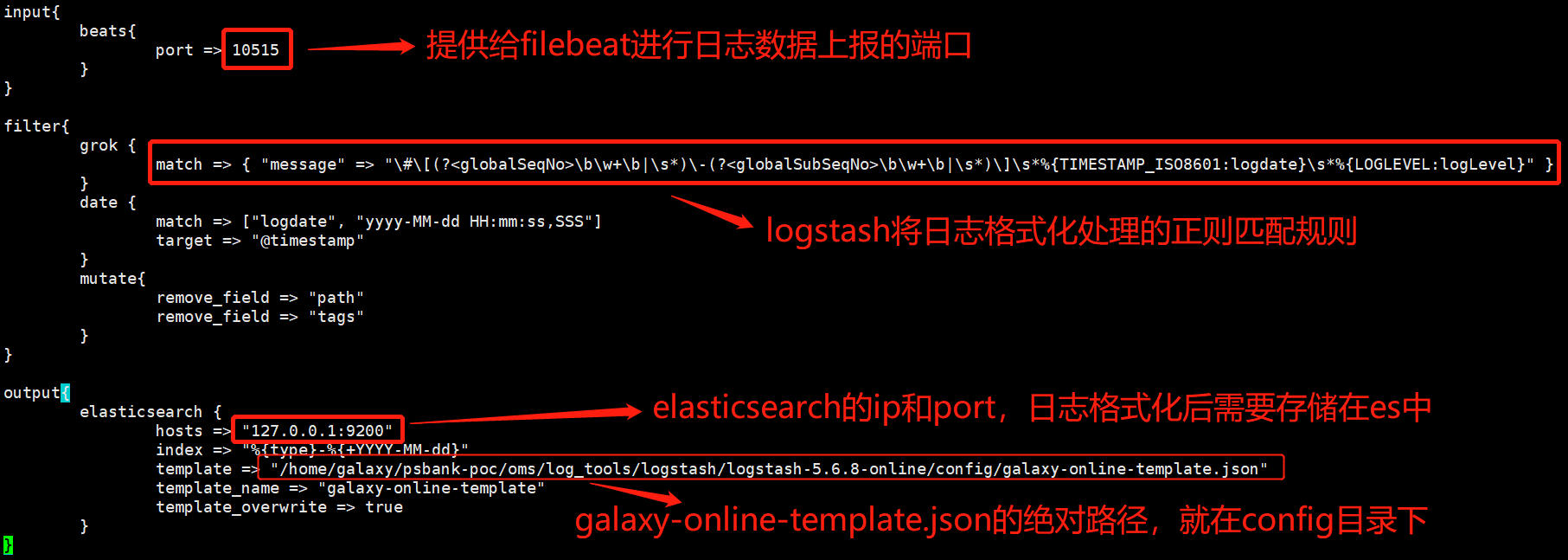

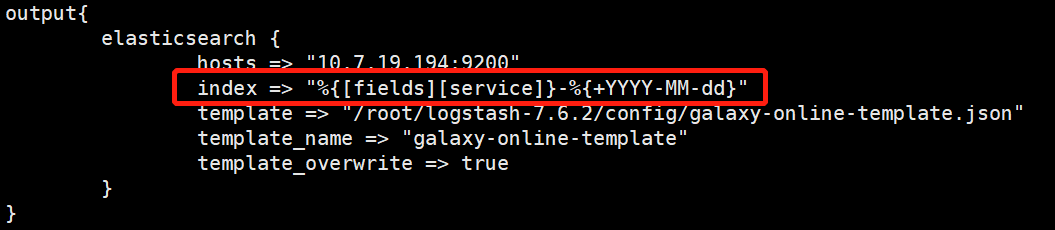

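The configuration file is located in the config directory under the Logstash installation directory. The settings that need to be changed are shown in the figures.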

If Elasticsearch runs as a cluster, list several Elasticsearch addresses in hosts, separated by commas, as in the following configuration:

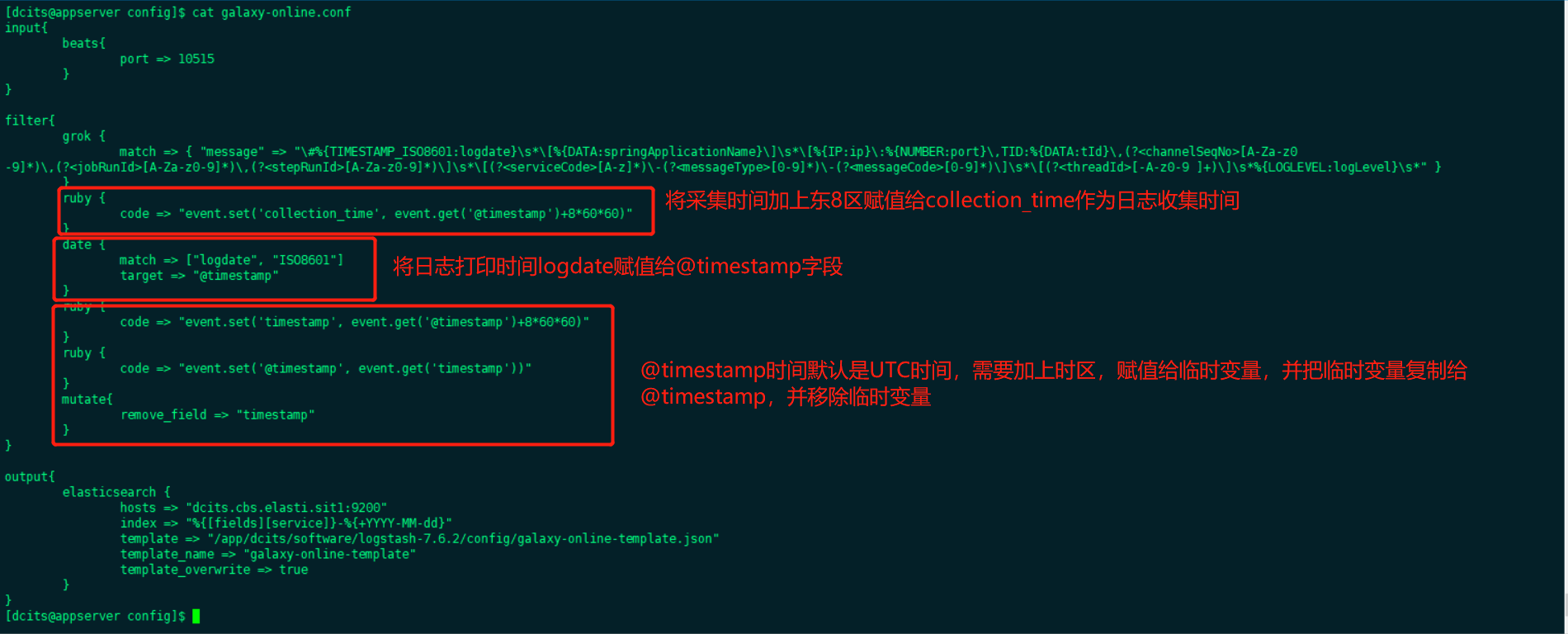

For the filter section, the following configuration is provided:

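```conf
filter {
  grok {
    match => { "message" => "\#%{TIMESTAMP_ISO8601:logdate}\s*\[%{DATA:springApplicationName}\]\s*\[%{IP:ip}\:%{NUMBER:port}\,TID:%{DATA:tId}\,(?<channelSeqNo>[A-Za-z0-9]*)\,(?<jobRunId>[A-Za-z0-9]*)\,(?<stepRunId>[A-Za-z0-9]*)\]\s*\[(?<serviceCode>[A-z]*)\-(?<messageType>[0-9]*)\-(?<messageCode>[0-9]*)\]\s*\[(?<threadId>[-A-z0-9]+)\]\s*%{LOGLEVEL:logLevel}\s*" }
  }
  # collection_time: the event's original @timestamp (collection time) shifted by +8 hours
  ruby {
    code => "event.set('collection_time', event.get('@timestamp')+8*60*60)"
  }
  # replace @timestamp with the time parsed from the log line (logdate)
  date {
    match => ["logdate", "ISO8601"]
    target => "@timestamp"
  }
  # shift @timestamp by +8 hours through a temporary field, then drop that field
  ruby {
    code => "event.set('timestamp', event.get('@timestamp')+8*60*60)"
  }
  ruby {
    code => "event.set('@timestamp', event.get('timestamp'))"
  }
  mutate {
    remove_field => "timestamp"
  }
}
```

As mentioned earlier, a Filebeat upgrade made the document_type option unavailable, and it was replaced by the fields.service option, so the Logstash configuration has to be adjusted accordingly. Change this setting as shown in the figures; all other settings remain configured as before.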

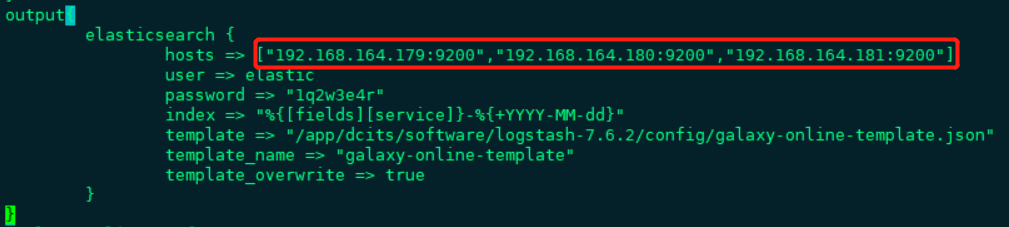

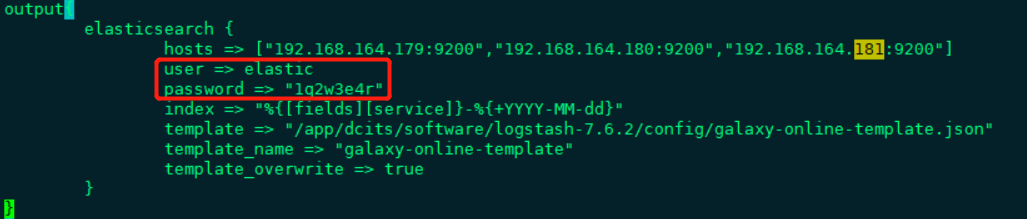
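Filebeat 6.x and later put the custom entries from the `fields` option under a top-level `fields` object in each event unless `fields_under_root: true` is set, so on the Logstash side the service name is addressed with the field reference `[fields][service]` instead of the `[type]` value that document_type used to populate (the conditional in the figures is presumably of the form `if [fields][service] == "galaxy-online"`). The hypothetical Python helper below only illustrates how such a bracketed reference walks a nested event; `resolve_field_ref` and the sample event are made up for illustration and are not Logstash code.

```python
import re
from typing import Any, Optional


def resolve_field_ref(event: dict, ref: str) -> Optional[Any]:
    """Hypothetical helper: resolve a Logstash-style reference like "[fields][service]"."""
    parts = re.findall(r"\[([^\[\]]+)\]", ref)
    if not parts:              # bare top-level name such as "fields"
        parts = [ref]
    value: Any = event
    for part in parts:
        if not isinstance(value, dict) or part not in value:
            return None        # the field does not exist in this event
        value = value[part]
    return value


# Simplified event shape shipped by a newer Filebeat: custom fields are
# grouped under "fields" because fields_under_root is not set.
event = {
    "message": "#2020-05-01 10:20:30.123 [ensemble-cif-service] ...",
    "fields": {"service": "galaxy-online"},
}

print(resolve_field_ref(event, "[type]"))             # document_type no longer sets this
print(resolve_field_ref(event, "[fields][service]"))  # the new place to look
```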

If Elasticsearch has a password configured, refer to the figure for the output configuration:

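Regarding the regular-expression rules used to format logs, note the following:

Matching is based entirely on regular expressions. Users with a solid grasp of regular expressions can refine these rules themselves to fit the log format their application actually produces.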

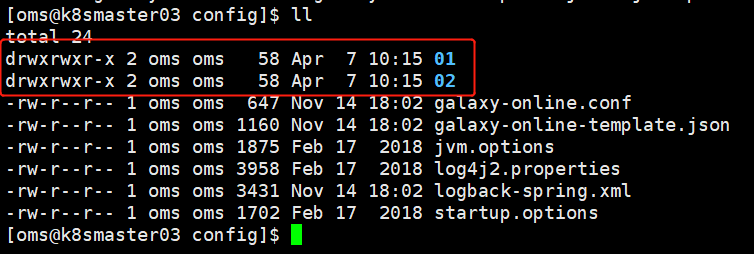

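Two sets of companion files are provided for reference, each consisting of an application log output format file (logback.xml) and a galaxy-online.conf showing how Logstash should configure the matching rules for it. They are in the 01 and 02 directories under config.

The matching rules cannot be fixed once and for all; they change with the actual log output format of each business application. The 01 and 02 directories contain companion files compiled from log formats that have been used before.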

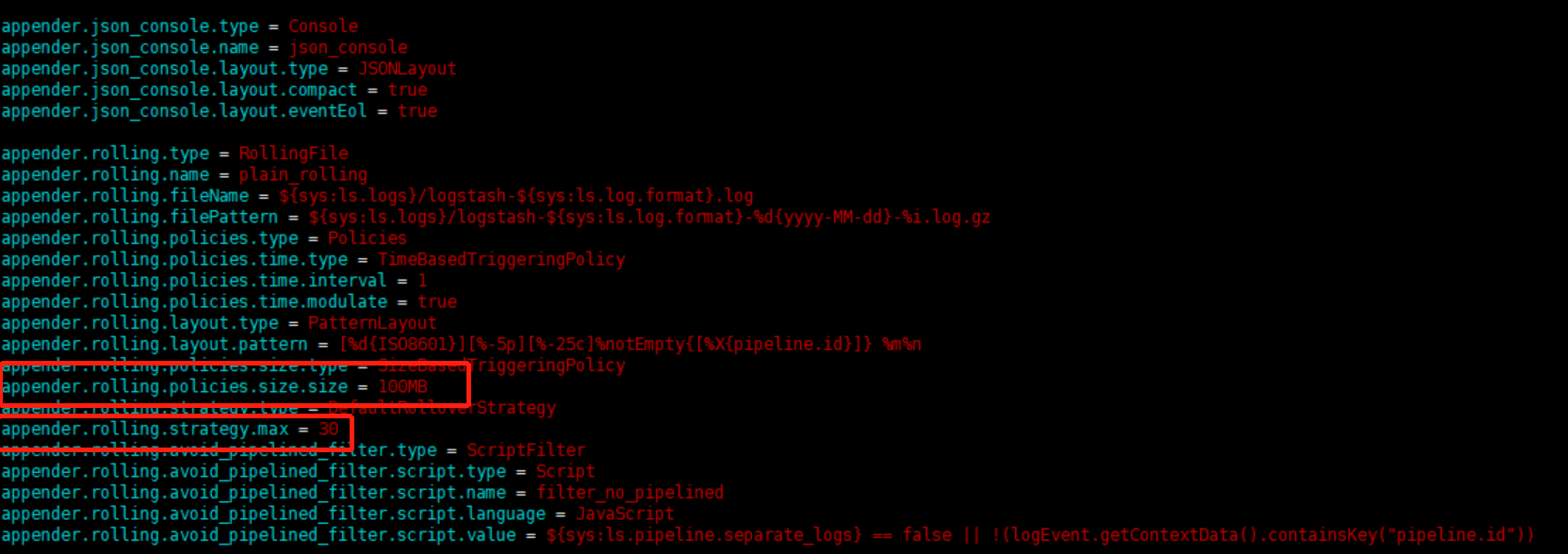

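Logstash runtime log configuration (optional)

Go to the config folder under the Logstash installation directory and open the log4j2.properties file:

```shell
vim log4j2.properties
```

The main settings that can be changed are:

```properties
appender.rolling.policies.size.size=100MB
appender.rolling.strategy.max = 20
# With these two properties, once the Logstash runtime log reaches 100MB it is rolled over and compressed, and at most 20 compressed archives are kept; beyond that the oldest archives are discarded. Adjust them to your needs.
```

1.1.4 Set permissions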

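In the Logstash installation directory, run the following command to set permissions on the vendor directory:

```shell
chmod -R 755 vendor/
```

Then go to the bin directory and run:

```shell
chmod 755 logstash
```

1.1.5 Start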

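Either of the following methods can be used.

(1) In the bin directory, run:

```shell
./logstash -f ../config/galaxy-online.conf &
```

or

```shell
nohup ./logstash -f ../config/galaxy-online.conf >logstash.log &
```

(2) In the Logstash installation directory, run:

```shell
./start.sh
```

1.1.6 Verify the service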

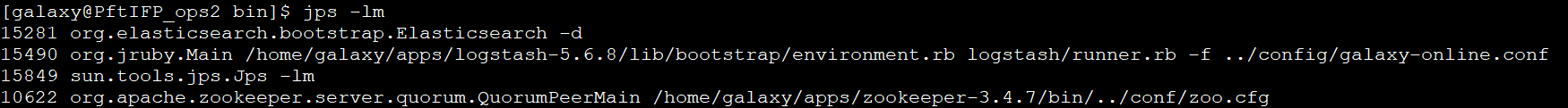

Run jps -lm and look for the Logstash process,

or check directly whether its listening port is in use.
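The port check can also be scripted. A minimal Python sketch, assuming the Logstash beats input listens on TCP port 5044 (the port used in Elastic's own examples; substitute whatever port the input block of galaxy-online.conf declares) and that the script runs on the Logstash host itself:

```python
import socket


def port_is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False


if __name__ == "__main__":
    # 5044 is an assumption; use the port from your own input configuration.
    print("logstash beats input listening:", port_is_listening("127.0.0.1", 5044))
```

A successful connect only proves something is listening on the port; jps -lm remains the way to confirm that it is the Logstash JVM.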

1.2 Logstash high-availability deployment

Deploy Logstash on multiple nodes and have Filebeat load-balance log data across them.

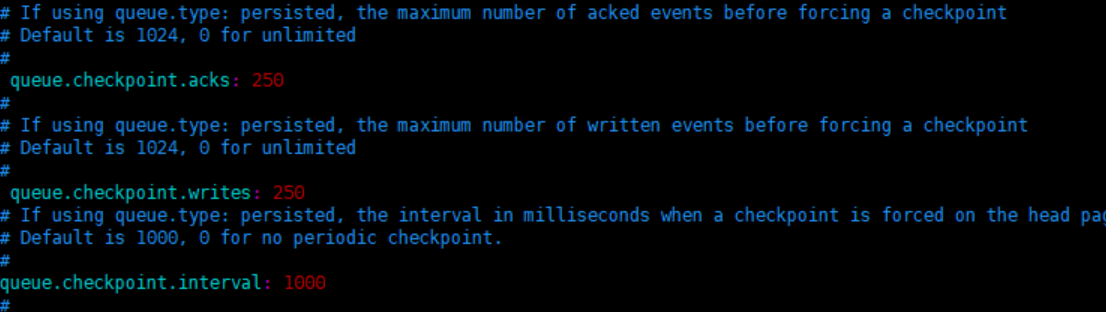

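Following the Logstash deployment steps in the section above, deploy and run Logstash on several servers. When log volume is very high and on-disk persistence is not enabled, Logstash throttles part of the data sent by Filebeat, and data loss has been observed. To persist queued data to disk, modify logstash.yml:

```yaml
queue.type: persisted
path.queue: /usr/share/logstash/data # directory for the queue data files; only used when queue.type is persisted
queue.page_capacity: 50mb # size of each page data file of the persisted queue
queue.max_events: 0 # maximum number of unread events in the persisted queue; 0 means unlimited
queue.max_bytes: 250mb # total capacity of the queue
queue.checkpoint.acks: 250 # maximum number of ACKed events before a checkpoint is forced (persisted queue); 0 means unlimited
queue.checkpoint.writes: 250 # maximum number of written events before a checkpoint is forced (persisted queue); 0 means unlimited
queue.checkpoint.interval: 1000 # interval in milliseconds at which a checkpoint is forced on the head page (persisted queue)
```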
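The persisted queue is stored as a sequence of page files of at most queue.page_capacity each, and once the queue holds queue.max_bytes, Logstash stops accepting new events from its inputs (backpressure) until space is freed, which lets Filebeat hold and resend data instead of losing it. A small Python sketch of that arithmetic for the values above; `parse_size` and `queue_summary` are hypothetical helpers, and the 1024-based units are an assumption (the page ratio comes out the same either way):

```python
import re
from typing import Dict

# Assumption for this sketch: 1024-based units, e.g. "50mb" = 50 * 1024 * 1024 bytes.
UNITS = {"b": 1, "kb": 1024, "mb": 1024 ** 2, "gb": 1024 ** 3}


def parse_size(text: str) -> int:
    """Parse a size such as '50mb' into bytes."""
    m = re.fullmatch(r"\s*(\d+)\s*([kmg]?b)\s*", text.lower())
    if m is None:
        raise ValueError(f"unrecognised size: {text!r}")
    return int(m.group(1)) * UNITS[m.group(2)]


def queue_summary(page_capacity: str, max_bytes: str) -> Dict[str, int]:
    page = parse_size(page_capacity)
    total = parse_size(max_bytes)
    # Sanity check added for this sketch: one page cannot exceed the whole queue.
    if page > total:
        raise ValueError("queue.page_capacity should not exceed queue.max_bytes")
    return {
        "page_bytes": page,
        "max_bytes": total,
        # approximate number of full pages held before inputs are blocked
        "pages_before_backpressure": total // page,
    }


print(queue_summary("50mb", "250mb"))
```

With the values above the queue holds roughly five full pages before backpressure starts.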

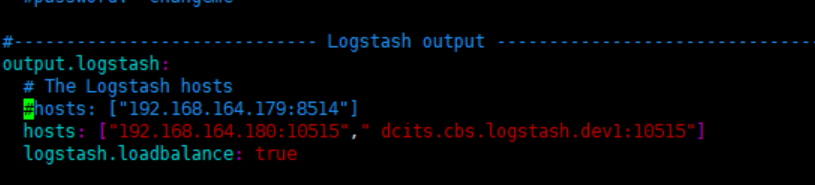

Modify filebeat.yml:

Set output.logstash.hosts to the addresses of all Logstash nodes.

Add the setting output.logstash.loadbalance: true.
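According to the Filebeat documentation, with loadbalance set to true the events are spread across all hosts listed in output.logstash.hosts, whereas with the default false Filebeat sends everything to one randomly chosen host and only switches when that host becomes unresponsive. The Python sketch below is a deliberately simplified model of that difference (plain round-robin over batches, hypothetical host names); it does not reproduce Filebeat's actual scheduling, retry, or worker logic.

```python
import itertools
import random
from collections import Counter
from typing import List


def distribute(batches: int, hosts: List[str], loadbalance: bool, seed: int = 0) -> Counter:
    """Count how many batches each host receives under a simplified model."""
    sent: Counter = Counter()
    if loadbalance:
        # Simplified: hand the batches to the hosts in turn.
        for _, host in zip(range(batches), itertools.cycle(hosts)):
            sent[host] += 1
    else:
        # Simplified: one randomly chosen host receives everything
        # (failover to another host on errors is not modelled here).
        host = random.Random(seed).choice(hosts)
        sent[host] += batches
    return sent


hosts = ["logstash-1:5044", "logstash-2:5044", "logstash-3:5044"]  # hypothetical
print(distribute(9, hosts, loadbalance=True))
print(distribute(9, hosts, loadbalance=False))
```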

1.3 Instance log collection configuration

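Log collection and viewing use Filebeat for collection, Logstash for processing, and Elasticsearch for storage. The instance log viewing feature keeps this existing collection approach; in addition, applications must be deployed with a logging configuration that writes the instance IP and port into each log entry.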

1.3.1 ELK configuration template for the core business systems

Filebeat (collection side) configuration:

```yaml
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-cif-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-cl-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-gl-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-ob-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-pf-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-rb-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
- type: log
  enabled: true
  fields:
    service: galaxy-online
  paths:
    - /app/dcits/logs/ensemble-tae-service/*/*/*.log
  multiline.pattern: ^\#
  multiline.negate: true
  multiline.match: after
```

Pattern configuration in logback.xml:

```xml
<property name="logging.pattern"
          value="#%d{yyyy-MM-dd HH:mm:ss.SSS} [${spring.application.name}] [%ip:${port},%tid,%X{channelSeqNo},%X{jobRunId},%X{stepRunId}] [%X{serviceCode}-%X{messageType}-%X{messageCode}] [%thread] %-5level %logger{50} %line - %msg%n"/>
```

Grok configuration in Logstash:

```conf
grok {
  match => { "message" => "\#%{TIMESTAMP_ISO8601:logdate}\s*\[%{DATA:springApplicationName}\]\s*\[%{IP:ip}\:%{NUMBER:port}\,TID:%{DATA:tId}\,(?<channelSeqNo>[A-Za-z0-9]*)\,(?<jobRunId>[A-Za-z0-9]*)\,(?<stepRunId>[A-Za-z0-9]*)\]\s*\[(?<serviceCode>[A-z]*)\-(?<messageType>[0-9]*)\-(?<messageCode>[0-9]*)\]\s*\[(?<threadId>[-A-z0-9 ]+)\]\s*%{LOGLEVEL:logLevel}\s*" }
}
```

The Pattern in logback and the grok expression in Logstash must correspond to each other in both format and fields.
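To see how the pieces fit, here is a Python sketch that (1) groups raw lines into events the way the Filebeat settings multiline.pattern ^\#, negate true, match after do, appending every line that does not start with # (such as a stack-trace line) to the preceding event, and (2) matches each event with a hand translation of the grok expression above. The sample log lines are invented, and the timestamp, IP, NUMBER and LOGLEVEL sub-patterns are simplified stand-ins for the real grok definitions; Python also needs `(?P<name>...)` where grok writes `(?<name>...)`.

```python
import re
from typing import Dict, List, Optional

# Simplified stand-ins for grok's TIMESTAMP_ISO8601, IP, NUMBER and LOGLEVEL
# (assumption: adequate for the sample lines below, not full equivalents).
TS = r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:[.,]\d+)?"
IP = r"\d{1,3}(?:\.\d{1,3}){3}"
NUMBER = r"\d+"
LOGLEVEL = r"TRACE|DEBUG|INFO|WARN(?:ING)?|ERROR|FATAL"

# Hand translation of the template grok expression; DATA (.*?) is inlined.
TEMPLATE = re.compile(
    r"\#(?P<logdate>" + TS + r")\s*"
    r"\[(?P<springApplicationName>.*?)\]\s*"
    r"\[(?P<ip>" + IP + r"):(?P<port>" + NUMBER + r"),TID:(?P<tId>.*?),"
    r"(?P<channelSeqNo>[A-Za-z0-9]*),(?P<jobRunId>[A-Za-z0-9]*),"
    r"(?P<stepRunId>[A-Za-z0-9]*)\]\s*"
    r"\[(?P<serviceCode>[A-z]*)-(?P<messageType>[0-9]*)-(?P<messageCode>[0-9]*)\]\s*"
    r"\[(?P<threadId>[-A-z0-9 ]+)\]\s*"
    r"(?P<logLevel>" + LOGLEVEL + r")"
)


def group_events(lines: List[str]) -> List[str]:
    """Group lines like Filebeat multiline (pattern ^#, negate: true, match: after)."""
    events: List[str] = []
    for line in lines:
        if line.startswith("#") or not events:
            events.append(line)        # a line starting with '#' opens a new event
        else:
            events[-1] += "\n" + line  # any other line joins the previous event
    return events


def parse(event: str) -> Optional[Dict[str, str]]:
    """Return the captured fields, or None where grok would tag _grokparsefailure."""
    m = TEMPLATE.search(event)
    return m.groupdict() if m else None


# Invented sample output of the template logback pattern.
raw = [
    "#2020-05-01 10:20:30.123 [ensemble-cif-service] [10.0.0.5:8081,TID:N/A,CH001,,] "
    "[CIF-1000-0001] [http-nio-8081-exec-1] INFO  c.d.e.DemoService 42 - request received",
    "#2020-05-01 10:20:31.456 [ensemble-cif-service] [10.0.0.5:8081,TID:N/A,CH002,,] "
    "[CIF-1000-0002] [http-nio-8081-exec-2] ERROR c.d.e.DemoService 57 - request failed",
    "java.lang.IllegalStateException: demo",
    "\tat c.d.e.DemoService.handle(DemoService.java:57)",
]

# A line in a different layout (no TID segment) does not match the template.
mismatched = "#2020-05-01 10:20:32.000 [ensemble-cif-service] [10.0.0.5:8081,,] [main] INFO  x - y"

for ev in group_events(raw):
    fields = parse(ev)
    print(fields["logLevel"], fields["threadId"], fields["ip"], fields["port"])
print(parse(mismatched))
```

The last line shows why the two sides must be changed together: once the logback layout drifts from the grok expression, every event fails to parse.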

Configuration examples:

1. Online transactions (cif): logback.xml configuration

```xml
<property name="COMET_LOG_PATTERN"
          value="#%d{yyyy-MM-dd HH:mm:ss.SSS} [${serviceName}] [%ip:${port},%X{jobRunId},%X{stepRunId}] [%thread] %-5level %logger{50} %line - %msg%n"/>
```

2. Batch transactions (batch): logback.xml configuration

```xml
<property name="COMET_LOG_PATTERN"
          value="#%d{yyyy-MM-dd HH:mm:ss.SSS} [${serviceName}] [%ip:${port},%X{jobId},%X{jobTaskId}] [%thread] %-5level %logger{50} %line - %msg%n"/>
```

Grok configuration in Logstash:

1. Online transactions (cif): grok configuration

```conf
grok {
  match => { "message" => "\#%{TIMESTAMP_ISO8601:logdate}\s*\[%{DATA:springApplicationName}\]\s*\[%{IP:ip}\:%{NUMBER:port}\,TID:%{DATA:tId}\,(?<channelSeqNo>[A-Za-z0-9]*)\]\s*\[(?<serviceCode>[A-z]*)\-(?<messageType>[0-9]*)\-(?<messageCode>[0-9]*)\]\s*\[(?<threadId>[-A-z0-9 ]+)\]\s*%{LOGLEVEL:logLevel}\s*" }
}
```

2. Batch transactions (batch): grok configuration

```conf
grok {
  match => { "message" => "\#%{TIMESTAMP_ISO8601:logdate}\s*\[%{DATA:springApplicationName}\]\s*\[%{IP:ip}\:%{NUMBER:port}\,(?<jobId>[A-Za-z0-9]*)\,(?<jobTaskId>[A-Za-z0-9]*)\]\s*\[(?<threadId>[-A-z0-9 ]+)\]\s*%{LOGLEVEL:logLevel}\s*" }
}
```

1.3.2 Common Grok regular expressions

```text
USERNAME [a-zA-Z0-9._-]+
USER %{USERNAME}
EMAILLOCALPART [a-zA-Z][a-zA-Z0-9_.+-=:]+
EMAILADDRESS %{EMAILLOCALPART}@%{HOSTNAME}
INT (?:[+-]?(?:[0-9]+))
BASE10NUM (?<![0-9.+-])(?>[+-]?(?:(?:[0-9]+(?:\.[0-9]+)?)|(?:\.[0-9]+)))
NUMBER (?:%{BASE10NUM})
BASE16NUM (?<![0-9A-Fa-f])(?:[+-]?(?:0x)?(?:[0-9A-Fa-f]+))
BASE16FLOAT \b(?<![0-9A-Fa-f.])(?:[+-]?(?:0x)?(?:(?:[0-9A-Fa-f]+(?:\.[0-9A-Fa-f]*)?)|(?:\.[0-9A-Fa-f]+)))\b
POSINT \b(?:[1-9][0-9]*)\b
NONNEGINT \b(?:[0-9]+)\b
WORD \b\w+\b
NOTSPACE \S+
SPACE \s*
DATA .*?
GREEDYDATA .*
QUOTEDSTRING (?>(?<!\\)(?>"(?>\\.|[^\\"]+)+"|""|(?>'(?>\\.|[^\\']+)+')|''|(?>`(?>\\.|[^\\`]+)+`)|``))
UUID [A-Fa-f0-9]{8}-(?:[A-Fa-f0-9]{4}-){3}[A-Fa-f0-9]{12}
# URN, allowing use of RFC 2141 section 2.3 reserved characters
URN urn:[0-9A-Za-z][0-9A-Za-z-]{0,31}:(?:%[0-9a-fA-F]{2}|[0-9A-Za-z()+,.:=@;$_!*'/?#-])+
# Networking
MAC (?:%{CISCOMAC}|%{WINDOWSMAC}|%{COMMONMAC})
CISCOMAC (?:(?:[A-Fa-f0-9]{4}\.){2}[A-Fa-f0-9]{4})
WINDOWSMAC (?:(?:[A-Fa-f0-9]{2}-){5}[A-Fa-f0-9]{2})
COMMONMAC (?:(?:[A-Fa-f0-9]{2}:){5}[A-Fa-f0-9]{2})
IPV6 ((([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4}|:))|(([0-9A-Fa-f]{1,4}:){6}(:[0-9A-Fa-f]{1,4}|((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){5}(((:[0-9A-Fa-f]{1,4}){1,2})|:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){4}(((:[0-9A-Fa-f]{1,4}){1,3})|((:[0-9A-Fa-f]{1,4})?:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){3}(((:[0-9A-Fa-f]{1,4}){1,4})|((:[0-9A-Fa-f]{1,4}){0,2}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){2}(((:[0-9A-Fa-f]{1,4}){1,5})|((:[0-9A-Fa-f]{1,4}){0,3}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){1}(((:[0-9A-Fa-f]{1,4}){1,6})|((:[0-9A-Fa-f]{1,4}){0,4}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(:(((:[0-9A-Fa-f]{1,4}){1,7})|((:[0-9A-Fa-f]{1,4}){0,5}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:)))(%.+)?
IPV4 (?<![0-9])(?:(?:[0-1]?[0-9]{1,2}|2[0-4][0-9]|25[0-5])[.](?:[0-1]?[0-9]{1,2}|2[0-4][0-9]|25[0-5])[.](?:[0-1]?[0-9]{1,2}|2[0-4][0-9]|25[0-5])[.](?:[0-1]?[0-9]{1,2}|2[0-4][0-9]|25[0-5]))(?![0-9])
IP (?:%{IPV6}|%{IPV4})
HOSTNAME \b(?:[0-9A-Za-z][0-9A-Za-z-]{0,62})(?:\.(?:[0-9A-Za-z][0-9A-Za-z-]{0,62}))*(\.?|\b)
IPORHOST (?:%{IP}|%{HOSTNAME})
HOSTPORT %{IPORHOST}:%{POSINT}
# paths
PATH (?:%{UNIXPATH}|%{WINPATH})
UNIXPATH (/([\w_%!$@:.,+~-]+|\\.)*)+
TTY (?:/dev/(pts|tty([pq])?)(\w+)?/?(?:[0-9]+))
WINPATH (?>[A-Za-z]+:|\\)(?:\\[^\\?*]*)+
URIPROTO [A-Za-z]([A-Za-z0-9+\-.]+)+
URIHOST %{IPORHOST}(?::%{POSINT:port})?
# uripath comes loosely from RFC1738, but mostly from what Firefox
# doesn't turn into %XX
URIPATH (?:/[A-Za-z0-9$.+!*'(){},~:;=@#%&_\-]*)+
#URIPARAM \?(?:[A-Za-z0-9]+(?:=(?:[^&]*))?(?:&(?:[A-Za-z0-9]+(?:=(?:[^&]*))?)?)*)?
URIPARAM \?[A-Za-z0-9$.+!*'|(){},~@#%&/=:;_?\-\[\]<>]*
URIPATHPARAM %{URIPATH}(?:%{URIPARAM})?
URI %{URIPROTO}://(?:%{USER}(?::[^@]*)?@)?(?:%{URIHOST})?(?:%{URIPATHPARAM})?
# Months: January, Feb, 3, 03, 12, December
MONTH \b(?:[Jj]an(?:uary|uar)?|[Ff]eb(?:ruary|ruar)?|[Mm](?:a|ä)?r(?:ch|z)?|[Aa]pr(?:il)?|[Mm]a(?:y|i)?|[Jj]un(?:e|i)?|[Jj]ul(?:y)?|[Aa]ug(?:ust)?|[Ss]ep(?:tember)?|[Oo](?:c|k)?t(?:ober)?|[Nn]ov(?:ember)?|[Dd]e(?:c|z)(?:ember)?)\b
MONTHNUM (?:0?[1-9]|1[0-2])
MONTHNUM2 (?:0[1-9]|1[0-2])
MONTHDAY (?:(?:0[1-9])|(?:[12][0-9])|(?:3[01])|[1-9])
# Days: Monday, Tue, Thu, etc...
DAY (?:Mon(?:day)?|Tue(?:sday)?|Wed(?:nesday)?|Thu(?:rsday)?|Fri(?:day)?|Sat(?:urday)?|Sun(?:day)?)
# Years?
YEAR (?>\d\d){1,2}
HOUR (?:2[0123]|[01]?[0-9])
MINUTE (?:[0-5][0-9])
# '60' is a leap second in most time standards and thus is valid.
SECOND (?:(?:[0-5]?[0-9]|60)(?:[:.,][0-9]+)?)
TIME (?!<[0-9])%{HOUR}:%{MINUTE}(?::%{SECOND})(?![0-9])
# datestamp is YYYY/MM/DD-HH:MM:SS.UUUU (or something like it)
DATE_US %{MONTHNUM}[/-]%{MONTHDAY}[/-]%{YEAR}
DATE_EU %{MONTHDAY}[./-]%{MONTHNUM}[./-]%{YEAR}
ISO8601_TIMEZONE (?:Z|[+-]%{HOUR}(?::?%{MINUTE}))
ISO8601_SECOND (?:%{SECOND}|60)
TIMESTAMP_ISO8601 %{YEAR}-%{MONTHNUM}-%{MONTHDAY}[T ]%{HOUR}:?%{MINUTE}(?::?%{SECOND})?%{ISO8601_TIMEZONE}?
DATE %{DATE_US}|%{DATE_EU}
DATESTAMP %{DATE}[- ]%{TIME}
TZ (?:[APMCE][SD]T|UTC)
DATESTAMP_RFC822 %{DAY} %{MONTH} %{MONTHDAY} %{YEAR} %{TIME} %{TZ}
DATESTAMP_RFC2822 %{DAY}, %{MONTHDAY} %{MONTH} %{YEAR} %{TIME} %{ISO8601_TIMEZONE}
DATESTAMP_OTHER %{DAY} %{MONTH} %{MONTHDAY} %{TIME} %{TZ} %{YEAR}
DATESTAMP_EVENTLOG %{YEAR}%{MONTHNUM2}%{MONTHDAY}%{HOUR}%{MINUTE}%{SECOND}
# Syslog Dates: Month Day HH:MM:SS
SYSLOGTIMESTAMP %{MONTH} +%{MONTHDAY} %{TIME}
PROG [\x21-\x5a\x5c\x5e-\x7e]+
SYSLOGPROG %{PROG:program}(?:\[%{POSINT:pid}\])?
SYSLOGHOST %{IPORHOST}
SYSLOGFACILITY <%{NONNEGINT:facility}.%{NONNEGINT:priority}>
HTTPDATE %{MONTHDAY}/%{MONTH}/%{YEAR}:%{TIME} %{INT}
# Shortcuts
QS %{QUOTEDSTRING}
# Log formats
SYSLOGBASE %{SYSLOGTIMESTAMP:timestamp} (?:%{SYSLOGFACILITY} )?%{SYSLOGHOST:logsource} %{SYSLOGPROG}:
# Log Levels
LOGLEVEL ([Aa]lert|ALERT|[Tt]race|TRACE|[Dd]ebug|DEBUG|[Nn]otice|NOTICE|[Ii]nfo|INFO|[Ww]arn?(?:ing)?|WARN?(?:ING)?|[Ee]rr?(?:or)?|ERR?(?:OR)?|[Cc]rit?(?:ical)?|CRIT?(?:ICAL)?|[Ff]atal|FATAL|[Ss]evere|SEVERE|EMERG(?:ENCY)?|[Ee]merg(?:ency)?)
```

Official documentation: https://www.elastic.co/guide/en/logstash/7.6/plugins-filters-grok.html
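Grok builds its final regular expression by replacing each `%{SYNTAX}` or `%{SYNTAX:SEMANTIC}` reference with the named definition from a pattern list like the one above, recursively, and a SEMANTIC name turns the reference into a named capture. A minimal Python sketch of that expansion, using four definitions copied from the list (picked because they avoid the atomic groups `(?>...)` that Python's `re` only accepts from 3.11). `load_patterns`, `expand` and `compile_grok` are hypothetical helpers, not part of Logstash, which evaluates the real patterns with an Oniguruma-compatible engine rather than Python's `re`.

```python
import re
from typing import Dict

# A few definitions copied from the pattern list above (format: NAME regex).
PATTERN_TEXT = r"""
USERNAME [a-zA-Z0-9._-]+
USER %{USERNAME}
INT (?:[+-]?(?:[0-9]+))
LOGLEVEL ([Aa]lert|ALERT|[Tt]race|TRACE|[Dd]ebug|DEBUG|[Nn]otice|NOTICE|[Ii]nfo|INFO|[Ww]arn?(?:ing)?|WARN?(?:ING)?|[Ee]rr?(?:or)?|ERR?(?:OR)?|[Cc]rit?(?:ical)?|CRIT?(?:ICAL)?|[Ff]atal|FATAL|[Ss]evere|SEVERE|EMERG(?:ENCY)?|[Ee]merg(?:ency)?)
"""

# %{SYNTAX} or %{SYNTAX:SEMANTIC}; real grok also allows dotted/bracketed names.
REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")
# Oniguruma-style named group "(?<name>" but not lookbehinds "(?<=" / "(?<!".
ONIG_NAMED = re.compile(r"\(\?<(?![=!])")


def load_patterns(text: str) -> Dict[str, str]:
    """Parse 'NAME regex' lines, skipping blank lines and # comments."""
    patterns = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, _, body = line.partition(" ")
        patterns[name] = body
    return patterns


def expand(expr: str, patterns: Dict[str, str], depth: int = 0) -> str:
    """Recursively replace %{...} references with their definitions."""
    if depth > 25:
        raise ValueError("pattern references nested too deeply (cycle?)")
    expr = ONIG_NAMED.sub("(?P<", expr)  # Python spells named groups (?P<name>...)

    def substitute(m: re.Match) -> str:
        name, field = m.group(1), m.group(2)
        body = expand(patterns[name], patterns, depth + 1)
        # A SEMANTIC name turns the reference into a named capture group.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    return REF.sub(substitute, expr)


def compile_grok(expr: str, patterns: Dict[str, str]) -> re.Pattern:
    return re.compile(expand(expr, patterns))


patterns = load_patterns(PATTERN_TEXT)
print(expand("%{USER}", patterns))
grok = compile_grok(r"\[%{USER:user}\] %{LOGLEVEL:level} code=%{INT:code} (?<rest>.*)", patterns)
m = grok.search("[svc_user] WARN code=-42 disk almost full")
print(m.groupdict())
```

The same mechanism is why the configurations in this section can mix library references such as %{IP:ip} with hand-written (?<channelSeqNo>...) groups in one expression.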